When clearing out cobwebs in an old part of a project (especially one I didn’t write), it’s easy to get carried away. I often catch myself thinking, “I wish I had time to rewrite this whole thing.”

Engineers hate tech debt. At every CTO, VP, or director Q&A I’ve attended, an IC asks about how much time will be dedicated to paying it down in the next quarter or year. The answer is always unsatisfactory.

The unspoken reason tech debt payments are so hard to get sign-off on is clear[1]: they provide little quantifiable value to the business. From the top down, playing it safe is preferred.

Refactors are a hard abstraction problem. Sometimes they are necessary, just like technical debt.

Wanting to tear down and rebuild is a common impulse. Once in a while, somebody cares enough to borrow the time to do it. Total refactors, while incorporating an existing feature set in a more elegant way, can be shortsighted. Here’s how they usually go down:

1. An initial solution is developed

2. Feature creep from building takes place

3. A refactor takes place to abstract or generalize the solution

4. Repeat steps 2-3 for the life of the project

This cycle is inherent in creating any system. What matters is how long the cycle lasts. Keeping this cycle too short or too long is expensive:

- Too long → technical debt

- Too short → overfitting

I find that the technical debt problem is well understood and the overfitting problem, exacerbated by avoidable refactors, is not.

Overfitting

You can think of refactors like database or Django migrations. Refactors are a way to keep the codebase in sync with business problems. The longer you wait to do them, the more of a headache they become, but a large, superfluous migration can be dangerously expensive.
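The migration analogy can be sketched in a few lines. This is a hypothetical, framework-free illustration using plain dicts and functions, not Django's actual migration API, and every name in it (`MIGRATIONS`, `migrate`, the field names) is an assumption made for the example. It shows small, ordered changes that keep a schema in step with what the business currently needs.

```python
# Hypothetical migration chain: each step is one small, ordered change to a
# plain dict standing in for a schema. This is NOT Django's migration API.
def add_email(schema):
    return {**schema, "email": "text"}


def add_created_at(schema):
    return {**schema, "created_at": "timestamp"}


def rename_name(schema):
    renamed = dict(schema)
    renamed["full_name"] = renamed.pop("name")
    return renamed


MIGRATIONS = [add_email, add_created_at, rename_name]


def migrate(schema, applied):
    """Apply every migration after the first `applied` ones, in order."""
    pending = MIGRATIONS[applied:]
    for step in pending:
        schema = step(schema)
    return schema, len(pending)


initial = {"name": "text"}
up_to_date, steps_run = migrate(initial, applied=0)
```

A codebase that is three steps behind pays for all three at once, which is the headache of waiting. The opposite failure would be a step that rewrites the whole schema when nothing in the business has changed.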

A refactor is a functionally equivalent solution that incorporates $N$ features into a new solution that treats all of them as first-class citizens, where $N$ is the set of all features added before the last refactor or architecture plan.

This leads to a new set of problems. Each time new features are added to the solution, the scope must be broadened. The more you try to generalize, the more you overfit[2]. The more you overfit, the more you have to refactor.

It follows that unnecessary refactors lead to unnecessary overfitting. It’s important to avoid refactoring simply because you are the new guy or an overzealous engineer.

Basic Real-World Example

Traffic systems are a hard problem. This example is oversimplified. Say we had a traffic control system that handled a single intersection. We might start with a simple solution involving a single traffic light. Soon we will realize that we need to handle multiple lanes of traffic. We might refactor to a solution that handles multiple lights. In the revised solution, the number of lanes and their attributes are codified.

Over time, our model of the problem becomes more complex. We need to handle multiple intersections, pedestrians, and transit lanes. We might refactor into a solution that handles all of these things. Construction costs money and time, so we want to minimize rebuilding and weak optimizations.

This example is fairly contrived, but its principles fit any system with flow and constraints.
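To make the traffic example concrete, here is a minimal Python sketch of the second iteration, where the lanes and their attributes are codified. Every name in it (`Lane`, `Intersection`, `green_lanes`, the phase names) is hypothetical and chosen for illustration; it is not a real traffic-control design. The model fits exactly the features known at refactor time, so a pedestrian crossing has nowhere to go without another rewrite.

```python
from dataclasses import dataclass


# Hypothetical model: names and fields are illustrative assumptions.
@dataclass(frozen=True)
class Lane:
    direction: str  # "north", "south", "east", or "west"
    turn: str       # "left", "straight", or "right"


class Intersection:
    """Second-iteration design: the set of lane attributes is baked in."""

    # Phases are hard-coded around the only thing we knew about: car lanes.
    PHASES = {
        "north_south": {"north", "south"},
        "east_west": {"east", "west"},
    }

    def __init__(self, lanes):
        for lane in lanes:
            if not isinstance(lane, Lane):
                # Anything that is not a car lane does not fit the model.
                raise TypeError(f"unsupported road user: {lane!r}")
        self.lanes = list(lanes)

    def green_lanes(self, phase):
        """Return the lanes that get a green light during `phase`."""
        allowed = self.PHASES[phase]
        return [lane for lane in self.lanes if lane.direction in allowed]


lanes = [Lane("north", "straight"), Lane("south", "left"), Lane("east", "right")]
intersection = Intersection(lanes)
north_south = intersection.green_lanes("north_south")

# A new requirement arrives that the model never anticipated.
try:
    Intersection(lanes + ["pedestrian crosswalk"])
    fits = True
except TypeError:
    fits = False
```

Here `north_south` holds the two lanes that get a green light, while `fits` ends up `False`: the tidy abstraction rejects the very next feature.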

Back to Software

In software, product needs are much more fluid than roads and infrastructure, so this cycle occurs a lot faster[3]. Any ground-up refactor will incorporate new features in a way that feels elegant, but it’s a local maximum with consequences.

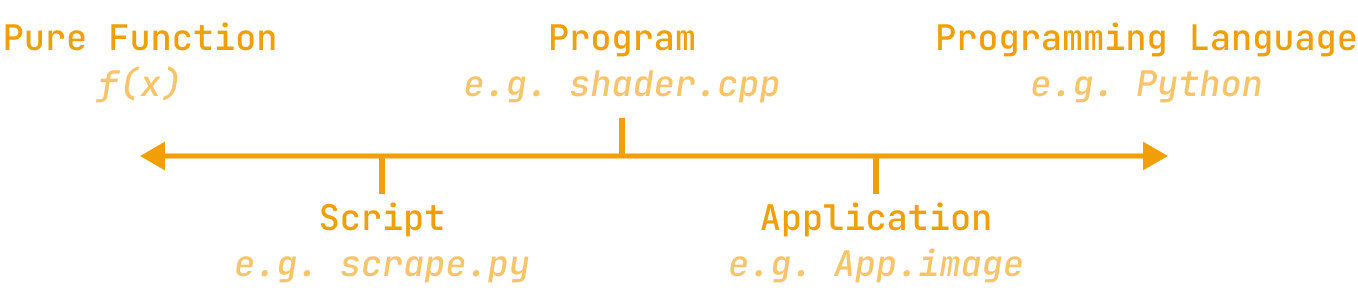

Taking this idea to its limits, the only way to avoid refactors is to have perfect foresight. Often, this is realized as a domain-specific language (DSL) that is expressive enough to handle all the features that will be added in the future[4]. In implementation, a system robust enough to handle any programmable business need would approximate a programming language. Getting just the right amount of abstraction is always difficult and requires great judgment.
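As a rough illustration of that slide toward a programming language, consider a hypothetical rule DSL for business logic. The rule format, the operator names, and the `evaluate` function below are all assumptions invented for this sketch, not an existing library. Each branch stands for one more business need the DSL had to absorb.

```python
# Hypothetical mini-DSL: rules are nested lists, e.g. ["gt", "total", 100].
# Every operator below was added to satisfy one more "business need".
def evaluate(rule, record):
    op, *args = rule
    if op == "eq":
        field, value = args
        return record[field] == value
    if op == "gt":
        field, value = args
        return record[field] > value
    if op == "and":
        return all(evaluate(sub, record) for sub in args)
    if op == "or":
        return any(evaluate(sub, record) for sub in args)
    if op == "not":
        (inner,) = args
        return not evaluate(inner, record)
    raise ValueError(f"unknown operator: {op}")


order = {"total": 120, "country": "US", "coupon": False}
rule = [
    "and",
    ["gt", "total", 100],
    ["or", ["eq", "country", "US"], ["eq", "coupon", True]],
]
eligible = evaluate(rule, order)
```

Comparisons, boolean logic, and negation are already here. It is easy to imagine the next requests being arithmetic, variables, and loops, at which point `evaluate` is an interpreter for a small general-purpose language.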

Refactors reflect only current and known needs. Modeling real-world problems is hard, but complete rewrites lead to premature optimization and overfitting to the current problem set. There is a tendency to refine a working solution to the point of diminishing returns.

Test-driven development is a similar case. The scope must be very well defined (e.g. parsing a CSV) for it to be useful.

Rigid frameworks are great for building on top of rigid platforms. A CMS for a well-defined format (like Markdown), for example, can be a great candidate for a DSL. The problem is well understood and has tight constraints. The same is not true for many projects. As in most systems development, the best solution depends on judgment and making the right tradeoff. It is generally better to acknowledge uncertainties than to ignore them.

By refactoring too early, you are creating too rigid a solution. The framework that solves the current feature set will inevitably be too narrow for the next one.

Footnotes

1. When you’re at a large firm it always feels good to hear an exec admit the writing is on the wall. Candor is common where I work right now, but there is always something that is not being said.

2. I’m borrowing the term ‘overfitting’ from the problem in machine learning. It occurs when a model is trained to fit a specific set of data too closely. As a result, the model will perform well on the training data, but poorly on new data in the field.

3. It’s not difficult to iterate faster than public works in most industries. It seems like every exciting BART or Caltrain plan is always years out of reach.

4. DSLs are great, but not a silver bullet. The reason migrations exist in the first place is an acknowledgement that a problem is too complex to be solved in one go.